What Computer Science Can Teach Us About Life

Insights from “Algorithms to Live By: The Computer Science of Human Decisions”

Algorithms to Live By: The Computer Science of Human Decisions. This title suggests a book that provides simple and concrete algorithms to religiously apply to your everyday life.

That couldn’t be further from the truth.

Instead, Algorithms to Live By raises questions for you to answer about human life by drawing parallels between computer science, mathematics, and everyday decisions.

For a book on algorithmic decision-making, the advice given is often not what you’d expect. It doesn’t recommend taking every single bit of information into account to make informed decisions. More often than not, the book advises:

do what’s unknown (chapter 2)

you don’t need to be perfectly organized (chapter 3)

don’t think so hard/follow your first instincts (chapter 7)

focus on the little picture sometimes (chapter 8)

randomness is often best (chapter 9)

sometimes you can’t quite have a perfect solution, so just do your best to get as close as you can and don’t sweat it (chapters 7, 8, 9)

Each chapter uses a unique branch of computer science to comment on a different aspect of human behavior, so I’ll go through all of my insights, one chapter at a time.

1. Optimal Stopping

When you’re interviewing a pool of candidates for a job, roughly how long should you wait to get a feel of the job pool before you start sending out offers?

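The answer is technically 37%: review the first 37% of the candidates without making any offers, then hire the first one who beats everyone you’ve seen so far. But the exact number isn’t what matters.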

The key takeaway from this chapter is that when we decide to start doing something that requires sampling data, from hiring to house-hunting, we could all benefit from spending a little time upfront deciding when to stop looking.

In an ideal world, you would have access to all the information possible before having to make any decision, but in the real world, you often have to make decisions quickly without having access to all the data.

When to stop collecting information and start acting is one of the most important decisions we can make.
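To make the 37% figure concrete, here is a minimal simulation sketch of the "look, then leap" rule. The function names, the pool size of 100, and the scoring of candidates as plain numbers are my own assumptions for illustration, not something taken from the book.

```python
import random


def look_then_leap(candidates, look_fraction=0.37):
    """Pick a candidate using the look-then-leap rule.

    `candidates` is a non-empty list of scores in interview order
    (higher is better).
    """
    cutoff = int(len(candidates) * look_fraction)
    # Look phase: observe without committing, remembering the best score seen.
    benchmark = max(candidates[:cutoff], default=float("-inf"))
    # Leap phase: commit to the first candidate who beats the benchmark.
    for score in candidates[cutoff:]:
        if score > benchmark:
            return score
    # Nobody beat the benchmark, so we are left with the last candidate.
    return candidates[-1]


def success_rate(pool_size=100, trials=20_000, look_fraction=0.37, seed=0):
    """Estimate how often the rule ends up hiring the single best candidate."""
    rng = random.Random(seed)
    wins = 0
    for _ in range(trials):
        candidates = list(range(pool_size))  # pool_size - 1 is the best score
        rng.shuffle(candidates)
        if look_then_leap(candidates, look_fraction) == pool_size - 1:
            wins += 1
    return wins / trials


if __name__ == "__main__":
    for fraction in (0.10, 0.37, 0.70):
        print(fraction, success_rate(look_fraction=fraction))
```

Running it with a few different values of `look_fraction` shows the tradeoff: stop looking too early and you commit before you know what "good" looks like, stop too late and the best candidate has probably already passed.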

2. Explore/Exploit

You want to go out to dinner tonight. Do you go to the new place on the block or the familiar favorite?

A variation of this problem plagued mathematicians for decades.

This turns out to be a trick question.

Choosing between exploring new options and exploiting familiar favorites all comes down to time. How long do you expect to live in this city?

You should always start with exploration, but as time passes, it makes sense to transition to exploiting the fruits of that exploration.

This offers a key insight into aging. The old stereotypically follow consistent routines while the young stereotypically explore and mix things up often. This is just another example of the explore/exploit tradeoff. As you age, you can identify the routines that provide the most value, while it’s necessary to explore when you’re young.

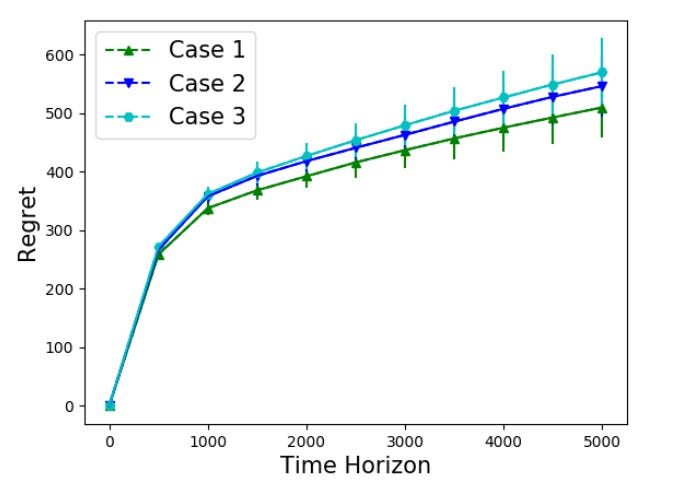

Another key insight comes from one solution to the explore/exploit problem: the regret-minimizing approach, which always goes for the option with the highest plausible payoff rather than the highest expected payoff. Two findings from this approach are quite applicable to life.

1. Even if you follow this approach, regret never disappears entirely, but it accumulates more slowly over time.

2. Optimism is the best prevention for regret in the long run.

The framework I found, which made the decision incredibly easy, was what I called — which only a nerd would call — a “regret minimization framework.”

So I wanted to project myself forward to age 80 and say, “Okay, now I’m looking back on my life. I want to have minimized the number of regrets I have.” I knew that when I was 80 I was not going to regret having tried this. I was not going to regret trying to participate in this thing called the Internet that I thought was going to be a really big deal. I knew that if I failed I wouldn’t regret that, but I knew the one thing I might regret is not ever having tried. I knew that would haunt me every day, and so, when I thought about it that way it was an incredibly easy decision.

— Jeff Bezos on leaving a comfortable job to start Amazon
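The "optimism" idea from this section can be sketched in code. Below is a minimal version of UCB1, one standard algorithm of this kind: each option gets an optimistic estimate (its average payoff so far plus a bonus that shrinks the more it has been tried), and you always pick the option with the highest estimate. The restaurant payout probabilities and the function name are made up for illustration.

```python
import math
import random


def ucb1(payout_probs, rounds=10_000, seed=0):
    """Play a win/lose bandit with UCB1 and return how often each arm was pulled.

    `payout_probs` holds each arm's (hidden) chance of paying out.
    """
    rng = random.Random(seed)
    n_arms = len(payout_probs)
    pulls = [0] * n_arms
    wins = [0] * n_arms

    def optimistic_estimate(arm, t):
        mean = wins[arm] / pulls[arm]
        # The bonus is large for rarely tried arms and shrinks with experience.
        bonus = math.sqrt(2 * math.log(t) / pulls[arm])
        return mean + bonus

    for t in range(1, rounds + 1):
        if t <= n_arms:
            arm = t - 1  # try every arm once before comparing estimates
        else:
            arm = max(range(n_arms), key=lambda a: optimistic_estimate(a, t))
        pulls[arm] += 1
        if rng.random() < payout_probs[arm]:
            wins[arm] += 1
    return pulls


if __name__ == "__main__":
    # Three hypothetical restaurants with different chances of a great meal.
    print(ucb1([0.2, 0.5, 0.8]))
```

The pull counts show the explore-then-exploit pattern: every option gets tried, but the weaker ones are revisited less and less often as the evidence piles up.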

3. Sorting algorithms

Sorting algorithms are everywhere around you, and in places you might not expect. Even a sports league is a sorting algorithm to find the best team.

Though this chapter provides a wide variety of sorting algorithms humans can use for different use cases, the main purpose of this chapter is to ask, should you?

It’s easy to sort your bookshelf, desk, and closet without stopping to ask if it’s the right thing to do. The book argues that you should only sort if it will save your future self significant time when searching.

Computer science suggests that, the vast majority of the time, sorting isn’t worth the effort, whether it’s your email, your bookshelf, or your papers.
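A rough back-of-the-envelope sketch of that tradeoff, under simplifying assumptions of my own: finding something in an unsorted collection takes about n/2 comparisons on average, sorting costs about n·log2(n) comparisons up front, and each search in sorted items then costs about log2(n). Real bookshelves and inboxes won't follow these numbers exactly; the point is only that sorting pays off when you search often enough.

```python
import math


def comparisons_unsorted(n_items, n_searches):
    # Linear scan: on average you look at half the items per search.
    return n_searches * n_items / 2


def comparisons_sorted(n_items, n_searches):
    # Pay roughly n*log2(n) once to sort, then log2(n) per binary search.
    return n_items * math.log2(n_items) + n_searches * math.log2(n_items)


def sorting_pays_off(n_items, n_searches):
    """True if sorting first is cheaper overall than searching unsorted items."""
    return comparisons_sorted(n_items, n_searches) < comparisons_unsorted(
        n_items, n_searches
    )


if __name__ == "__main__":
    for searches in (5, 20, 100):
        print(searches, sorting_pays_off(1000, searches))
```

With 1,000 items, sorting is a waste under this model if you only expect to search a handful of times, and only starts to win once you expect a couple dozen searches or more.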

4. Caching

How should you group things together?

It’s not color, age, or size.

The field of caching shows that it’s optimal to group things by how likely you are to use them in the future and keep the likeliest group the closest to yourself.

How does one predict the future? Looking at the near past.

The concept of temporal locality says that what you’ve used most recently in the past is what you’re most likely to use in the near future.

Cheekily, this shows why messy piles of mail are often optimal; you fling each new piece of mail (which is the mail most likely to be used in the future) onto the top of the pile.
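One common way to turn temporal locality into a rule is "least recently used" (LRU) eviction: when you run out of space, throw out whatever you touched longest ago. The sketch below is a minimal illustration with names of my own choosing (`LRUCache`, `desk`), and Python's standard library already offers a ready-made version as `functools.lru_cache`.

```python
from collections import OrderedDict


class LRUCache:
    """A tiny cache that evicts the least recently used item when full."""

    def __init__(self, capacity):
        self.capacity = capacity
        self.items = OrderedDict()  # oldest use first, most recent use last

    def get(self, key):
        if key not in self.items:
            return None
        # Using an item moves it back to the "top of the pile".
        self.items.move_to_end(key)
        return self.items[key]

    def put(self, key, value):
        self.items[key] = value
        self.items.move_to_end(key)
        if len(self.items) > self.capacity:
            # Drop whatever has gone unused the longest.
            self.items.popitem(last=False)


if __name__ == "__main__":
    desk = LRUCache(capacity=2)
    desk.put("bill", "electricity")
    desk.put("letter", "from grandma")
    desk.get("bill")                      # the bill is now the most recent
    desk.put("magazine", "March issue")   # the letter gets evicted
    print(list(desk.items))
```

This is the messy mail pile in code form: anything you touch goes to the top, and what sinks to the bottom is the first thing to go.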

5. Scheduling

Every time a machine switches tasks, it pays a switching cost (similar to the attention residue humans experience). Many systems use what’s called interrupt coalescing: they batch interruptions together and handle them all at once, which reduces the number of times they have to switch.

This echoes a lot of productivity advice for humans: batch tasks together and avoid email for as long as you can to reduce interruptions.

6. Bayes

There are a lot of great mathematical models in this chapter for making quick and informed decisions about the future, but one of the key points is that the predictions you make are shaped by the beliefs you already hold.

If you want to make better predictions, you need to make sure you have more informed beliefs. This requires thinking critically about your news sources and where you get your beliefs from (hint: it’s usually family or close friends).

7. Overfitting

Machine learning models often run into the problem of overfitting, where they fit their training data perfectly but are so specific to it that they can’t make good predictions about new data.

The book argues that the same thing can happen in decision-making. Sometimes the best way to make a good decision is to worry less about taking everything into account and go with your gut instinct after evaluating the few most important factors.

8. Relaxation

Many problems in mathematics (and life) are intractable (there is no known way to find the exact solution in a reasonable amount of time).

What many mathematicians do in such cases is “relax” the problem. They remove some limitations and try to solve an easier problem that can give insight into the intractable one.

We do this in many ways ourselves. Figuring out what to do with your life is a daunting task, but a helpful prompt many people use is “What would you do if you won the lottery?”. This “relaxes” the monetary constraint in a way that can give you insight into solving the bigger problem.

9. Randomness

Sometimes the best (or only) approach to an intractable problem is using randomness. Even after taking everything into account, many decisions could go either way and sometimes you just have to be content with making a random decision and seeing the outcome.

10. Networking

Computers communicate through acknowledgments, and research shows that humans are the same way. The best way to have a good conversation is by using positive acknowledgment (nods, uh-huhs, yeahs).

Computers also face a painfully familiar issue: what do you do when the demands on your attention become a never-ending queue? On the modern internet, this issue has become so common that it has a name: bufferbloat. The solution is simple. Chop off the excess and don’t worry about it.

11. Game Theory

Here’s a basic intro to game theory: https://www.investopedia.com/terms/g/gametheory.asp.

One of the key insights this chapter makes from game theory is that if each individual takes the best option, it’s often worse for everyone.

Imagine there are two coworkers in a small company. The logical thing for both is to take slightly fewer vacations than the other, so they can be seen as more loyal. What this can inevitably lead to is a “race to the bottom”, where they both end up taking no vacations at all.

Similarly, in a group project with no clearly defined roles, it’s the logical choice for each person to do as little as they can and assume the others will pick up the ball. But this leads to disaster as a group.

The book argues that you can change these negative outcomes by:

Changing the strategy (don’t adopt a strategy based on anticipating the strategies of others, because they could have a strategy based on anticipating your strategy)

Changing the game (setting up clear rules that mandate vacations or that assign distinct roles to everyone)

Seeking out games where honesty is the dominant (winning) strategy
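The vacation example from this section can be sketched as a tiny two-player game. The payoff numbers below are invented purely for illustration (higher is better); what matters is their ordering: skipping vacation looks better for each coworker no matter what the other does, yet both end up worse off than if they had both rested.

```python
from itertools import product

STRATEGIES = ("take_vacation", "skip_vacation")

# PAYOFFS[(a_choice, b_choice)] = (payoff to A, payoff to B).
# Hypothetical numbers chosen only to reproduce the "race to the bottom".
PAYOFFS = {
    ("take_vacation", "take_vacation"): (3, 3),
    ("take_vacation", "skip_vacation"): (1, 4),
    ("skip_vacation", "take_vacation"): (4, 1),
    ("skip_vacation", "skip_vacation"): (2, 2),
}


def best_response_for_a(b_choice):
    """A's best move if B's choice is taken as given."""
    return max(STRATEGIES, key=lambda a: PAYOFFS[(a, b_choice)][0])


def best_response_for_b(a_choice):
    """B's best move if A's choice is taken as given."""
    return max(STRATEGIES, key=lambda b: PAYOFFS[(a_choice, b)][1])


def equilibria():
    """Outcomes where neither coworker gains by changing their mind alone."""
    return [
        (a, b)
        for a, b in product(STRATEGIES, STRATEGIES)
        if best_response_for_a(b) == a and best_response_for_b(a) == b
    ]


if __name__ == "__main__":
    print(equilibria())
```

Under these made-up payoffs the only stable outcome is both coworkers skipping vacation, even though both resting would leave each of them better off. Changing the game, for example by mandating vacations, amounts to rewriting the payoff table so that this outcome is no longer the stable one.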

12. Computational Kindness

Even when computers follow the proper algorithm, they don’t necessarily get the right results. An optimal solution is merely the best process; it doesn’t necessarily lead to the best outcome all the time (or even most of the time).

But if you follow the right process and put in all the steps, you can’t blame yourself when the results aren’t what you hoped for. This is one of two key messages of the conclusion.

The other is the idea of computational kindness. Computation is bad. Computers “want” to do as little of it as possible, and so do humans. When you’re with a group of friends who are trying to agree on a place to go to dinner, the seemingly polite thing is to say “I’m open to anything”.

But what you’re doing is passing the ball to everyone else and forcing them to put in more effort. The computationally kind thing to do is just be honest and give a list of the specific places you’d like to go to.

I’ll sign off with a quote from the book.

The intuitive standard for rational decision-making is carefully considering all available options and taking the best one. At first glance, computers look like the paragons of this approach, grinding their way through complex computations for as long as it takes to get perfect answers. But as we’ve seen, that is an outdated picture of what computers do: it’s a luxury afforded by an easy problem. In the hard cases, the best algorithms are all about doing what makes the most sense in the least amount of time, which by no means involves careful consideration to every factor and pursuing every computation to the end. Life is just too complicated for that.

In almost every domain we’ve considered, we’ve seen how the more real-world factors we include — whether it’s having incomplete information when interviewing job applicants, dealing with a changing world when trying to resolve the explore/exploit dilemma, or having certain tasks depend on others when we’re trying to get things done — the more likely we are to end up in a situation where finding the perfect solution takes unreasonably long. And indeed, people are almost always confronting what computer science regards as the hard cases. Up against such hard cases, effective algorithms make assumptions, show a bias toward simpler solutions, trade off the costs of error against the costs of delay, and take chances.

These aren’t the concessions we make when we can’t be rational. They’re what being rational means.

All emphasis is my own.